Upgrading GitLab (FREE SELF)

Upgrading GitLab is a relatively straightforward process, but the complexity can increase based on the installation method you have used, how old your GitLab version is, if you're upgrading to a major version, and so on.

Make sure to read the whole page as it contains information related to every upgrade method.

NOTE: Upgrade GitLab to the latest available patch release, for example 13.8.8 rather than 13.8.0. This includes versions you must stop at on the upgrade path, as there may be fixes for issues relating to the upgrade process.

The maintenance policy documentation has additional information about upgrading, including:

- How to interpret GitLab product versioning.

- Recommendations on what release to run.

- How we use patch and security patch releases.

- When we backport code changes.

Upgrade based on installation method

Depending on the installation method and your GitLab version, there are multiple official ways to update GitLab:

- Linux packages (Omnibus GitLab)

- Source installations

- Docker installations

- Kubernetes (Helm) installations

Linux packages (Omnibus GitLab)

The package upgrade guide contains the steps needed to update a package installed by official GitLab repositories.

There are also instructions when you want to update to a specific version.

Installation from source

- Upgrading Community Edition and Enterprise Edition from source - The guidelines for upgrading Community Edition and Enterprise Edition from source.

- Patch versions guide includes the steps needed for a patch version, such as 13.2.0 to 13.2.1, and apply to both Community and Enterprise Editions.

In the past we used separate documents for the upgrading instructions, but we have since switched to using a single document. The old upgrading guidelines can still be found in the Git repository:

Installation using Docker

GitLab provides official Docker images for both Community and Enterprise editions. They are based on the Omnibus package and instructions on how to update them are in a separate document.

Installation using Helm

GitLab can be deployed into a Kubernetes cluster using Helm. Instructions on how to update a cloud-native deployment are in a separate document.

Use the version mapping from the chart version to GitLab version to determine the upgrade path.

Plan your upgrade

See the guide to plan your GitLab upgrade.

Checking for background migrations before upgrading

Certain releases may require different migrations to be finished before you update to the newer version.

Batched migrations are a migration type available in GitLab 14.0 and later. Background migrations and batched migrations are not the same, so you should check that both are complete before updating.

Decrease the time required to complete these migrations by increasing the number of Sidekiq workers that can process jobs in the background_migration queue.

Background migrations

For Omnibus installations:

sudo gitlab-rails runner -e production 'puts Gitlab::BackgroundMigration.remaining'

sudo gitlab-rails runner -e production 'puts Gitlab::Database::BackgroundMigrationJob.pending'

For installations from source:

cd /home/git/gitlab

sudo -u git -H bundle exec rails runner -e production 'puts Gitlab::BackgroundMigration.remaining'

sudo -u git -H bundle exec rails runner -e production 'puts Gitlab::Database::BackgroundMigrationJob.pending'

Batched background migrations

GitLab 14.0 introduced batched background migrations.

Some installations may need to run GitLab 14.0 for at least a day to complete the database changes introduced by that upgrade.

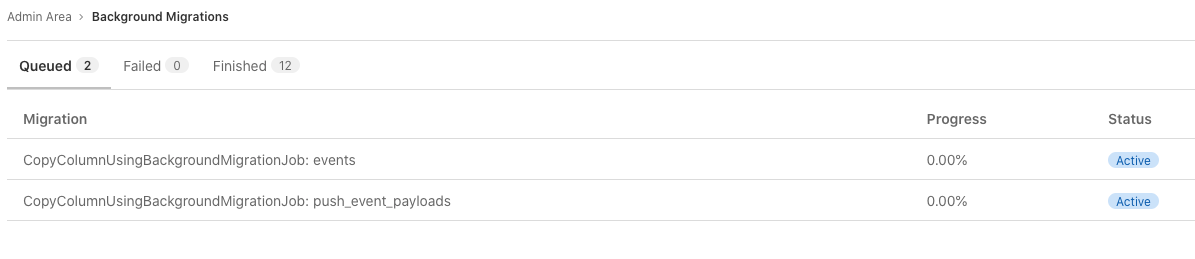

Check the status of batched background migrations

To check the status of batched background migrations:

- On the top bar, select Menu > Admin.

- On the left sidebar, select Monitoring > Background Migrations.

All migrations must have a Finished status before you upgrade GitLab.

The status of batched background migrations can also be queried directly in the database.

- Log into a psql prompt according to the directions for your instance's installation method (for example, sudo gitlab-psql for Omnibus installations).

- Run the following query in the psql session to see details on incomplete batched background migrations:

  select job_class_name, table_name, column_name, job_arguments from batched_background_migrations where status <> 3;

If the migrations are not finished and you try to update to a later version, GitLab prompts you with an error:

Expected batched background migration for the given configuration to be marked as 'finished', but it is 'active':

If you get this error, check the batched background migration options to complete the upgrade.
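The database check described above can also be scripted as a pre-upgrade gate. The following is a minimal sketch, not an official tool. It assumes an Omnibus installation where sudo gitlab-psql passes the -t, -A, and -c options through to psql. The names parse_rows and unfinished_migrations are hypothetical helpers introduced for illustration.

```python
import subprocess

# Query quoted from this page; rows with a status other than 3 are not finished.
QUERY = (
    "select job_class_name, table_name, column_name, job_arguments "
    "from batched_background_migrations where status <> 3;"
)

COLUMNS = ["job_class_name", "table_name", "column_name", "job_arguments"]


def parse_rows(psql_output):
    """Split unaligned, tuples-only psql output into one dict per row."""
    rows = []
    for line in psql_output.splitlines():
        if line.strip():
            # job_arguments is the last column, so limit the split to 3 cuts.
            rows.append(dict(zip(COLUMNS, line.split("|", 3))))
    return rows


def unfinished_migrations(run=subprocess.run):
    """Run the query through gitlab-psql and return the unfinished rows.

    Assumption: gitlab-psql forwards -t (tuples only), -A (unaligned)
    and -c (command) to psql, so columns come back separated by '|'.
    """
    command = ["sudo", "gitlab-psql", "-t", "-A", "-c", QUERY]
    result = run(command, capture_output=True, text=True, check=True)
    return parse_rows(result.stdout)
```

An empty list returned by unfinished_migrations() would correspond to every migration showing a Finished status in the Admin Area. The run parameter exists only so that the command can be replaced with a stub when testing.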

What do I do if my background migrations are stuck?

WARNING: The following operations can disrupt your GitLab performance.

It is safe to re-execute these commands, especially if you have 1000+ pending jobs which would likely overflow your runtime memory.

For Omnibus installations

# Start the rails console

sudo gitlab-rails c

# Execute the following in the rails console

scheduled_queue = Sidekiq::ScheduledSet.new

pending_job_classes = scheduled_queue.select { |job| job["class"] == "BackgroundMigrationWorker" }.map { |job| job["args"].first }.uniq

pending_job_classes.each { |job_class| Gitlab::BackgroundMigration.steal(job_class) }

For installations from source

# Start the rails console

sudo -u git -H bundle exec rails console -e production

# Execute the following in the rails console

scheduled_queue = Sidekiq::ScheduledSet.new

pending_job_classes = scheduled_queue.select { |job| job["class"] == "BackgroundMigrationWorker" }.map { |job| job["args"].first }.uniq

pending_job_classes.each { |job_class| Gitlab::BackgroundMigration.steal(job_class) }

Batched migrations (GitLab 14.0 and newer):

See troubleshooting batched background migrations.

Dealing with running CI/CD pipelines and jobs

If you upgrade your GitLab instance while the GitLab Runner is processing jobs, the trace updates fail. When GitLab is back online, the trace updates should self-heal. However, depending on the error, the GitLab Runner either retries or eventually terminates job handling.

As for the artifacts, the GitLab Runner attempts to upload them three times, after which the job eventually fails.

To address the above two scenarios, do the following before upgrading:

- Plan your maintenance.

- Pause your runners.

- Wait until all jobs are finished.

- Upgrade GitLab.

Checking for pending Advanced Search migrations (PREMIUM SELF)

This section is only applicable if you have enabled the Elasticsearch integration (PREMIUM SELF).

Major releases require all Advanced Search migrations to be finished from the most recent minor release in your current version before the major version upgrade. You can find pending migrations by running the following command:

For Omnibus installations

sudo gitlab-rake gitlab:elastic:list_pending_migrations

For installations from source

cd /home/git/gitlab

sudo -u git -H bundle exec rake gitlab:elastic:list_pending_migrations

What do I do if my Advanced Search migrations are stuck?

See how to retry a halted migration.

Upgrading without downtime

Read how to upgrade without downtime.

Upgrading to a new major version

Upgrading the major version requires more attention. Backward-incompatible changes and migrations are reserved for major versions. Follow the directions carefully as we cannot guarantee that upgrading between major versions is seamless.

Follow these steps to ensure a successful major version upgrade:

- Upgrade to the latest minor version of the preceding major version.

- Upgrade to the next major version (X.0.Z).

- Upgrade to its first minor version (X.1.Z).

- Proceed with upgrading to a newer release of that major version.

Identify a supported upgrade path.

It's also important to ensure that any background migrations have been fully completed before upgrading to a new major version.

If you have enabled the Elasticsearch integration (PREMIUM SELF), then ensure all Advanced Search migrations are completed in the last minor version within your current version before proceeding with the major version upgrade.

If your GitLab instance has any runners associated with it, it is very important to upgrade GitLab Runner to match the GitLab minor version that was upgraded to. This is to ensure compatibility with GitLab versions.
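As a small illustration of the matching rule, the check below compares only the major and minor components of two version strings. It is a sketch under the assumption that both versions are plain X.Y.Z strings. The function name same_minor is hypothetical.

```python
def same_minor(gitlab_version, runner_version):
    """Return True when both versions share the same major.minor series."""
    return gitlab_version.split(".")[:2] == runner_version.split(".")[:2]


print(same_minor("14.2.6", "14.2.1"))  # True: both are 14.2
print(same_minor("14.2.6", "14.1.0"))  # False: the runner is still on 14.1
```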

Upgrade paths

Upgrading across multiple GitLab versions in one go is only possible by accepting downtime. The following examples assume downtime is acceptable while upgrading. If you don't want any downtime, read how to upgrade with zero downtime.

Find where your version sits in the upgrade path below, and upgrade GitLab accordingly, while also consulting the version-specific upgrade instructions:

8.11.Z -> 8.12.0 -> 8.17.7 -> 9.5.10 -> 10.8.7 -> 11.11.8 -> 12.0.12 -> 12.1.17 -> 12.10.14 -> 13.0.14 -> 13.1.11 -> 13.8.8 -> latest 13.12.Z -> latest 14.0.Z -> latest 14.1.Z -> latest 14.Y.Z

The following table, while not exhaustive, shows some examples of the supported upgrade paths. Additional steps between the mentioned versions are possible. We list the minimally necessary steps only.

| Target version | Your version | Supported upgrade path | Note |
|---|---|---|---|
| 14.2.6 | 13.10.2 | 13.10.2 -> 13.12.12 -> 14.0.11 -> 14.1.8 -> 14.2.6 | Three intermediate versions are required: 13.12, 14.0, and 14.1, then 14.2.6. |
| 14.1.8 | 13.9.2 | 13.9.2 -> 13.12.12 -> 14.0.11 -> 14.1.8 | Two intermediate versions are required: 13.12 and 14.0, then 14.1.8. |
| 13.12.10 | 12.9.2 | 12.9.2 -> 12.10.14 -> 13.0.14 -> 13.1.11 -> 13.8.8 -> 13.12.10 | Four intermediate versions are required: 12.10, 13.0, 13.1 and 13.8.8, then 13.12.10. |
| 13.2.10 | 11.5.0 | 11.5.0 -> 11.11.8 -> 12.0.12 -> 12.1.17 -> 12.10.14 -> 13.0.14 -> 13.1.11 -> 13.2.10 | Six intermediate versions are required: 11.11, 12.0, 12.1, 12.10, 13.0 and 13.1, then 13.2.10. |
| 12.10.14 | 11.3.4 | 11.3.4 -> 11.11.8 -> 12.0.12 -> 12.1.17 -> 12.10.14 | Three intermediate versions are required: 11.11, 12.0 and 12.1, then 12.10.14. |
| 12.9.5 | 10.4.5 | 10.4.5 -> 10.8.7 -> 11.11.8 -> 12.0.12 -> 12.1.17 -> 12.9.5 | Four intermediate versions are required: 10.8, 11.11, 12.0 and 12.1, then 12.9.5. |
| 12.2.5 | 9.2.6 | 9.2.6 -> 9.5.10 -> 10.8.7 -> 11.11.8 -> 12.0.12 -> 12.1.17 -> 12.2.5 | Five intermediate versions are required: 9.5, 10.8, 11.11, 12.0, and 12.1, then 12.2.5. |
| 11.3.4 | 8.13.4 | 8.13.4 -> 8.17.7 -> 9.5.10 -> 10.8.7 -> 11.3.4 | 8.17.7 is the last version in version 8, 9.5.10 is the last version in version 9, 10.8.7 is the last version in version 10. |
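The rows in the table follow a simple rule: take every required stop that is newer than your version and older than the minor series of the target, then finish on the target. The sketch below encodes that rule so a path can be derived for other version pairs. It is an illustration only, not an official tool. The patch numbers used for 13.12, 14.0, and 14.1 are the examples from the table and stand in for "latest patch release". The names REQUIRED_STOPS, parse, and upgrade_path are hypothetical.

```python
# Required stops taken from the upgrade path on this page. The 13.12, 14.0
# and 14.1 patch numbers are the table's examples, standing in for "latest".
REQUIRED_STOPS = [
    "8.12.0", "8.17.7", "9.5.10", "10.8.7", "11.11.8",
    "12.0.12", "12.1.17", "12.10.14",
    "13.0.14", "13.1.11", "13.8.8", "13.12.12",
    "14.0.11", "14.1.8",
]


def parse(version):
    """Turn 'X.Y.Z' into a tuple of integers so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def upgrade_path(current, target):
    """Return the versions to install in order, starting at `current`."""
    cur, tgt = parse(current), parse(target)
    if tgt <= cur:
        raise ValueError("target must be newer than the current version")
    # Keep each stop that is newer than the current version and belongs to
    # an earlier minor series than the target.
    stops = [
        stop for stop in REQUIRED_STOPS
        if parse(stop) > cur and parse(stop)[:2] < tgt[:2]
    ]
    return [current] + stops + [target]


print(" -> ".join(upgrade_path("13.10.2", "14.2.6")))
# 13.10.2 -> 13.12.12 -> 14.0.11 -> 14.1.8 -> 14.2.6
```

The result is only a starting point: the version-specific upgrade instructions still apply to every stop on the path.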

Upgrading between editions

GitLab comes in two flavors: Community Edition which is MIT licensed, and Enterprise Edition which builds on top of the Community Edition and includes extra features mainly aimed at organizations with more than 100 users.

Below you can find some guides to help you change GitLab editions.

Community to Enterprise Edition

NOTE: The following guides are for subscribers of the Enterprise Edition only.

If you wish to upgrade your GitLab installation from Community to Enterprise Edition, follow the guides below based on the installation method:

- Source CE to EE update guides - The steps are very similar to a version upgrade: stop the server, get the code, update configuration files for the new functionality, install libraries and do migrations, update the init script, start the application and check its status.

- Omnibus CE to EE - Follow this guide to update your Omnibus GitLab Community Edition to the Enterprise Edition.

Enterprise to Community Edition

If you need to downgrade your Enterprise Edition installation back to Community Edition, you can follow this guide to make the process as smooth as possible.

Version-specific upgrading instructions

Each month, major, minor or patch releases of GitLab are published along with a release post. You should read the release posts for all versions you're passing over. At the end of major and minor release posts, there are three sections to look for specifically:

- Deprecations

- Removals

- Important notes on upgrading

These include:

- Steps you need to perform as part of an upgrade. For example 8.12 required the Elasticsearch index to be recreated. Any older version of GitLab upgrading to 8.12 or higher would require this.

- Changes to the versions of software we support such as ceasing support for IE11 in GitLab 13.

Apart from the instructions in this section, you should also check the installation-specific upgrade instructions, based on how you installed GitLab:

NOTE: The information that follows about Ruby and Git versions does not apply to Omnibus installations and Helm chart deployments. They ship with appropriate Ruby and Git versions and do not use the system binaries for Ruby and Git. There is no need to install Ruby or Git when using these two approaches.
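For source installations, the Git and Ruby minimums stated in the version notes below can be gathered into a small lookup. The following sketch does that with only the values that appear on this page, so releases older than the first listed requirement return None. The names MIN_GIT, MIN_RUBY, and requirement_for are hypothetical.

```python
# Minimum versions stated in the version-specific notes on this page.
# Keys are the GitLab (major, minor) release that introduced the requirement.
MIN_GIT = {(13, 1): "2.24", (13, 6): "2.29", (13, 11): "2.31", (14, 4): "2.33"}
MIN_RUBY = {(13, 6): "2.7.2", (14, 3): "2.7.4"}


def requirement_for(gitlab_version, table):
    """Return the newest requirement introduced at or before gitlab_version."""
    major, minor = (int(part) for part in gitlab_version.split(".")[:2])
    applicable = [release for release in table if release <= (major, minor)]
    return table[max(applicable)] if applicable else None


print(requirement_for("14.2.6", MIN_GIT))   # 2.31
print(requirement_for("14.2.6", MIN_RUBY))  # 2.7.2
```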

14.5.0

- When make is run, Gitaly builds are now created in _build/bin and no longer in the root directory of the source directory. If you are using a source install, update paths to these binaries in your systemd unit files or init scripts by following the documentation.

- Connections between Workhorse and Gitaly use the Gitaly backchannel protocol by default. If you deployed a gRPC proxy between Workhorse and Gitaly, Workhorse can no longer connect. As a workaround, disable the temporary workhorse_use_sidechannel feature flag. If you need a proxy between Workhorse and Gitaly, use a TCP proxy. If you have feedback about this change, please go to this issue.

- In 14.1 we introduced a background migration that changes how we store merge request diff commits in order to significantly reduce the amount of storage needed. In 14.5 we introduce a set of migrations that wrap up this process by making sure that all remaining jobs over the merge_request_diff_commits table are completed. These jobs will have already been processed in most cases so that no extra time is necessary during an upgrade to 14.5. But if there are remaining jobs, the deployment may take a few extra minutes to complete.

  All merge request diff commits will automatically incorporate these changes, and there are no additional requirements to perform the upgrade. Existing data in the merge_request_diff_commits table remains unpacked until you run VACUUM FULL merge_request_diff_commits. But note that the VACUUM FULL operation locks and rewrites the entire merge_request_diff_commits table, so the operation takes some time to complete and it blocks access to this table until the end of the process. We advise you to only run this command while GitLab is not actively used or it is taken offline for the duration of the process. The time it takes to complete depends on the size of the table, which can be obtained by using select pg_size_pretty(pg_total_relation_size('merge_request_diff_commits'));.

  For more information, refer to this issue.

14.4.0

- Git 2.33.x and later is required. We recommend you use the Git version provided by Gitaly.

- See Maintenance mode issue in GitLab 13.9 to 14.4.

- After enabling database load balancing by default in 14.4.0, we found an issue where cron jobs would not work if the connection to PostgreSQL was severed, as Sidekiq would continue using a bad connection. Geo and other features that rely on cron jobs running regularly do not work until Sidekiq is restarted. We recommend upgrading to GitLab 14.4.3 and later if this issue affects you.

14.3.0

- Instances running 14.0.0 - 14.0.4 should not upgrade directly to GitLab 14.2 or later.

- Ensure batched background migrations finish before upgrading to 14.3.Z from earlier GitLab 14 releases.

- Ruby 2.7.4 is required. Refer to the Ruby installation instructions for how to proceed.

- GitLab 14.3.0 contains post-deployment migrations to address Primary Key overflow risk for tables with an integer PK for the tables listed below:

  If the migrations are executed as part of a no-downtime deployment, there's a risk of failure due to lock conflicts with the application logic, resulting in lock timeout or deadlocks. In each case, these migrations are safe to re-run until successful:

  # For Omnibus GitLab
  sudo gitlab-rake db:migrate

  # For source installations
  sudo -u git -H bundle exec rake db:migrate RAILS_ENV=production

14.2.0

- Instances running 14.0.0 - 14.0.4 should not upgrade directly to GitLab 14.2 or later.

- Ensure batched background migrations finish before upgrading to 14.2.Z from earlier GitLab 14 releases.

- GitLab 14.2.0 contains background migrations to address Primary Key overflow risk for tables with an integer PK for the tables listed below:

  - ci_build_needs
  - ci_build_trace_chunks
  - ci_builds_runner_session
  - deployments
  - geo_job_artifact_deleted_events
  - push_event_payloads
  - ci_job_artifacts:

  If the migrations are executed as part of a no-downtime deployment, there's a risk of failure due to lock conflicts with the application logic, resulting in lock timeout or deadlocks. In each case, these migrations are safe to re-run until successful:

  # For Omnibus GitLab
  sudo gitlab-rake db:migrate

  # For source installations
  sudo -u git -H bundle exec rake db:migrate RAILS_ENV=production

14.1.0

- Instances running 14.0.0 - 14.0.4 should not upgrade directly to GitLab 14.2 or later but can upgrade to 14.1.Z.

  It is not required for instances already running 14.0.5 (or higher) to stop at 14.1.Z. 14.1 is included on the upgrade path for the broadest compatibility with self-managed installations, and to ensure 14.0.0-14.0.4 installations do not encounter issues with batched background migrations.

14.0.0

- Database changes made by the upgrade to GitLab 14.0 can take hours or days to complete on larger GitLab instances. These batched background migrations update whole database tables to mitigate primary key overflow and must be finished before upgrading to GitLab 14.2 or higher.

- Due to an issue where BatchedBackgroundMigrationWorkers were not working for self-managed instances, a fix was created that requires an update to at least 14.0.5. The fix was also released in 14.1.0.

  After you update to 14.0.5 or a later 14.0 patch version, batched background migrations need to finish before you update to a later version.

  If the migrations are not finished and you try to update to a later version, you'll see an error like:

  Expected batched background migration for the given configuration to be marked as 'finished', but it is 'active':

  See how to resolve this error.

- In GitLab 13.3 some pipeline processing methods were deprecated and this code was completely removed in GitLab 14.0. If you plan to upgrade from GitLab 13.2 or older directly to 14.0 (unsupported), you should not have any pipelines running when you upgrade or the pipelines might report the wrong status when the upgrade completes. You should instead follow a supported upgrade path.

- The support of PostgreSQL 11 has been dropped. Make sure to update your database to version 12 before updating to GitLab 14.0.

Upgrading to later 14.Y releases

- Instances running 14.0.0 - 14.0.4 should not upgrade directly to GitLab 14.2 or later, because of batched background migrations.

  - Upgrade first to either:

    - 14.0.5 or a later 14.0.Z patch release.

    - 14.1.0 or a later 14.1.Z patch release.

  - Batched background migrations need to finish before you update to a later version and may take longer than usual.
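The rule above can be expressed as a small guard for upgrade scripts. This is a sketch that assumes plain X.Y.Z version strings. The function names parse and needs_intermediate_stop are hypothetical.

```python
def parse(version):
    """Turn 'X.Y.Z' into a tuple of integers so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def needs_intermediate_stop(current, target):
    """True when `current` is 14.0.0 to 14.0.4 and `target` is 14.2 or later."""
    cur, tgt = parse(current), parse(target)
    affected = (14, 0, 0) <= cur <= (14, 0, 4)
    return affected and tgt[:2] >= (14, 2)


print(needs_intermediate_stop("14.0.3", "14.2.6"))  # True: stop at 14.0.5+ or 14.1.Z first
print(needs_intermediate_stop("14.0.5", "14.2.6"))  # False
print(needs_intermediate_stop("14.0.3", "14.1.8"))  # False
```

A False result does not remove the need to wait for batched background migrations to finish before moving on.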

13.12.0

See Maintenance mode issue in GitLab 13.9 to 14.4.

13.11.0

- Git 2.31.x and later is required. We recommend you use the Git version provided by Gitaly.

13.10.0

See Maintenance mode issue in GitLab 13.9 to 14.4.

13.9.0

- We've detected an issue with a column rename that prevents upgrades to GitLab 13.9.0, 13.9.1, 13.9.2, and 13.9.3 when following the zero-downtime steps. It is necessary to perform the following additional steps for the zero-downtime upgrade:

  - Before running the final sudo gitlab-rake db:migrate command on the deploy node, execute the following queries using the PostgreSQL console (or sudo gitlab-psql) to drop the problematic triggers:

    drop trigger trigger_e40a6f1858e6 on application_settings;
    drop trigger trigger_0d588df444c8 on application_settings;
    drop trigger trigger_1572cbc9a15f on application_settings;
    drop trigger trigger_22a39c5c25f3 on application_settings;

  - Run the final migrations:

    sudo gitlab-rake db:migrate

  If you have already run the final sudo gitlab-rake db:migrate command on the deploy node and have encountered the column rename issue, you see the following error:

    -- remove_column(:application_settings, :asset_proxy_whitelist)
    rake aborted!
    StandardError: An error has occurred, all later migrations canceled:
    PG::DependentObjectsStillExist: ERROR: cannot drop column asset_proxy_whitelist of table application_settings because other objects depend on it
    DETAIL: trigger trigger_0d588df444c8 on table application_settings depends on column asset_proxy_whitelist of table application_settings

  To work around this bug, follow the previous steps to complete the update. More details are available in this issue.

- For GitLab Enterprise Edition customers, we noticed an issue when subscription expiration is upcoming, and you create new subgroups and projects. If you fall under that category and get 500 errors, you can work around this issue:

  - SSH into your GitLab server, and open a Rails console:

    sudo gitlab-rails console

  - Disable the following features:

    Feature.disable(:subscribable_subscription_banner)
    Feature.disable(:subscribable_license_banner)

  - Restart Puma or Unicorn:

    # For installations using Puma
    sudo gitlab-ctl restart puma

    # For installations using Unicorn
    sudo gitlab-ctl restart unicorn

13.8.8

GitLab 13.8 includes a background migration to address an issue with duplicate service records. If duplicate services are present, this background migration must complete before a unique index is applied to the services table, which was introduced in GitLab 13.9. Upgrades from GitLab 13.8 and earlier to later versions must include an intermediate upgrade to GitLab 13.8.8 and must wait until the background migrations complete before proceeding.

If duplicate services are still present, an upgrade to 13.9.x or later results in a failed upgrade with the following error:

PG::UniqueViolation: ERROR: could not create unique index "index_services_on_project_id_and_type_unique"

DETAIL: Key (project_id, type)=(NNN, ServiceName) is duplicated.

13.6.0

Ruby 2.7.2 is required. GitLab does not start with Ruby 2.6.6 or older versions.

The required Git version is Git v2.29 or higher.

13.4.0

GitLab 13.4.0 includes a background migration to move all remaining repositories in legacy storage to hashed storage. There are known issues with this migration which are fixed in GitLab 13.5.4 and later. If possible, skip 13.4.0 and upgrade to 13.5.4 or higher instead. Note that the migration can take quite a while to run, depending on how many repositories must be moved. Be sure to check that all background migrations have completed before upgrading further.

13.3.0

The recommended Git version is Git v2.28. The minimum required version of Git v2.24 remains the same.

13.2.0

GitLab installations that have multiple web nodes must be upgraded to 13.1 before upgrading to 13.2 (and later) due to a breaking change in Rails that can result in authorization issues.

GitLab 13.2.0 remediates an email verification bypass. After upgrading, if some of your users are unexpectedly encountering 404 or 422 errors when signing in, or "blocked" messages when using the command line, their accounts may have been un-confirmed. In that case, please ask them to check their email for a re-confirmation link. For more information, see our discussion of Email confirmation issues.

GitLab 13.2.0 relies on the btree_gist extension for PostgreSQL. For installations with an externally managed PostgreSQL setup, please make sure to install the extension manually before upgrading GitLab if the database user for GitLab is not a superuser. This is not necessary for installations using a GitLab managed PostgreSQL database.

13.1.0

In 13.1.0, you must upgrade to either:

- At least Git v2.24 (previously, the minimum required version was Git v2.22).

- The recommended Git v2.26.

Failure to do so results in internal errors in the Gitaly service in some RPCs due to the use of the new --end-of-options Git flag.

Additionally, in GitLab 13.1.0, the version of Rails was upgraded from 6.0.3 to 6.0.3.1. The Rails upgrade included a change to CSRF token generation which is not backwards-compatible - GitLab servers with the new Rails version generate CSRF tokens that are not recognizable by GitLab servers with the older Rails version - which could cause non-GET requests to fail for multi-node GitLab installations.

So, if you are using multiple Rails servers and specifically upgrading from 13.0, all servers must first be upgraded to 13.1.Z before upgrading to 13.2.0 or later:

- Ensure all GitLab web nodes are running GitLab 13.1.Z.

- Enable the global_csrf_token feature flag to enable the new method of CSRF token generation:

  Feature.enable(:global_csrf_token)

- Only then, continue to upgrade to later versions of GitLab.

12.2.0

In 12.2.0, we enabled Rails' authenticated cookie encryption. Old sessions are automatically upgraded.

However, session cookie downgrades are not supported. After upgrading to 12.2.0, any downgrade invalidates all sessions and logs users out.

12.1.0

If you are planning to upgrade from 12.0.Z to 12.10.Z, it is necessary to perform an intermediary upgrade to 12.1.Z before upgrading to 12.10.Z to avoid issues like #215141.

12.0.0

In 12.0.0 we made various database-related changes. These changes require that users first upgrade to the latest 11.11 patch release. After upgrading to 11.11.Z, users can upgrade to 12.0.Z. Failure to do so may result in database migrations not being applied, which could lead to application errors.

It is also required that you upgrade to 12.0.Z before moving to a later version of 12.Y.

Example 1: you are currently using GitLab 11.11.8, which is the latest patch release for 11.11.Z. You can upgrade as usual to 12.0.Z.

Example 2: you are currently using a version of GitLab 10.Y. To upgrade, first upgrade to the last 10.Y release (10.8.7), then to the last 11.Y release (11.11.8). After upgrading to 11.11.8, you can safely upgrade to 12.0.Z.

See our documentation on upgrade paths for more information.

Maintenance mode issue in GitLab 13.9 to 14.4

When Maintenance mode is enabled, users cannot sign in with SSO, SAML, or LDAP.

Users who were signed in before Maintenance mode was enabled will continue to be signed in. If the admin who enabled Maintenance mode loses their session, then they will not be able to disable Maintenance mode via the UI. In that case, you can disable Maintenance mode via the API or Rails console.

This bug was fixed in GitLab 14.5.0, and is expected to be backported to GitLab 14.3 and 14.4.

Miscellaneous

- MySQL to PostgreSQL guides you through migrating your database from MySQL to PostgreSQL.

- Restoring from backup after a failed upgrade

- Upgrading PostgreSQL Using Slony, for upgrading a PostgreSQL database with minimal downtime.

- Managing PostgreSQL extensions